
# Backups

Nightly backups are handled by NixOS's restic module and a helper module I've written.

This takes a nightly ZFS snapshot, from which app and other mutable data is backed up with restic to both a local folder on my NAS and Cloudflare R2 :octicons-info-16:{ title="R2 mainly due to the cheap cost and low egress fees" }. Backing up from a ZFS snapshot keeps the restic backup consistent: backing up files while they are in use (especially a sqlite database) can produce a corrupted copy. All restic jobs back up the state captured by the 02:00 snapshot, regardless of when they run that night.

Another benefit of this approach is that it is service-agnostic: containers, NixOS services, qemu VMs, whatever, all keep their files in the same place on the filesystem (the persist folder), so they can all be backed up the same way.

The alternative is to shut services down during the backup (which could be done with restic's backup pre/post scripts), but ZFS snapshots are a godsend here, and I'm already running them for impermanence.
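For comparison, the shutdown alternative might look roughly like the sketch below, using the `backupPrepareCommand` and `backupCleanupCommand` options of NixOS's `services.restic.backups` module. The repository path, password file and `podman-prowlarr.service` unit name are hypothetical placeholders. Note that this variant backs up the live directory and costs some downtime for every job.

```nix
{ pkgs, ... }:
{
  # Hypothetical job that backs up the live directory with the app stopped.
  services.restic.backups.prowlarr-local = {
    paths = [ "/persist/containers/prowlarr" ];
    repository = "/mnt/nas/restic/prowlarr"; # hypothetical local repository
    passwordFile = "/run/secrets/restic-password"; # hypothetical secret path
    initialize = true;

    # Runs before `restic backup`: stop the app so its sqlite database is at rest.
    backupPrepareCommand = "${pkgs.systemd}/bin/systemctl stop podman-prowlarr.service";
    # Runs after the backup: start the app again.
    backupCleanupCommand = "${pkgs.systemd}/bin/systemctl start podman-prowlarr.service";
  };
}
```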

!!! info "Backing up without snapshots/shutdowns?"

    This is a pattern I see fairly often: if you back up files raw without stopping the service first, you might want to check that your backups aren't corrupted.

The timeline then is:

| Time | Activity |
|---|---|
| 02:00 | ZFS deletes the previous snapshot and creates a new one, `rpool/safe/persist@restic_nightly_snap` |
| 02:05 - 04:05 | Restic backs up from the new snapshot's hidden read-only `.zfs` mount to the local and remote locations, with a random delay per service |
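A minimal sketch of that timeline as NixOS config: a oneshot service that rotates the snapshot at 02:00, plus a timer setting that starts a job at 02:05 with a random per-job delay of up to two hours. The unit name `restic-nightly-snapshot` is hypothetical and this is not necessarily how the helper module does it; the `prowlarr-local` job is assumed to be defined elsewhere, with this fragment merging into it.

```nix
{ config, ... }:
let
  snap = "rpool/safe/persist@restic_nightly_snap";
in
{
  # Hypothetical unit: recreate the snapshot that every restic job reads from.
  systemd.services.restic-nightly-snapshot = {
    description = "Recreate ${snap} for restic";
    startAt = "02:00";
    path = [ config.boot.zfs.package ];
    serviceConfig.Type = "oneshot";
    script = ''
      # Destroy last night's snapshot if it exists, then take a fresh one.
      if zfs list -H -t snapshot ${snap} >/dev/null 2>&1; then
        zfs destroy ${snap}
      fi
      zfs snapshot ${snap}
    '';
  };

  # Merges into a job defined elsewhere: start at 02:05 plus a random delay of up to 2h.
  services.restic.backups.prowlarr-local.timerConfig = {
    OnCalendar = "02:05";
    RandomizedDelaySec = "2h";
    Persistent = true;
  };
}
```

Because `RandomizedDelaySec` applies per timer, each job picks its own offset inside the 02:05 to 04:05 window rather than all hitting the disks and the network at once.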

## Automatic backups

I have added sops secrets for both my local and remote backup targets in my restic module :simple-github: /nixos/modules/nixos/services/restic/. These provide the restic password and the 'AWS' credentials for the S3-compatible R2 bucket.
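A sketch of how such secrets could feed a remote job, assuming sops-nix and restic's S3 backend (R2 speaks the S3 API). The secret names, `<account-id>` and `<bucket>` are hypothetical placeholders, and the environment file is assumed to contain `AWS_ACCESS_KEY_ID=...` and `AWS_SECRET_ACCESS_KEY=...` lines.

```nix
{ config, ... }:
{
  # Hypothetical secret names; the real sops file layout is not shown here.
  sops.secrets = {
    "services/restic/password" = { }; # restic repository password
    "services/restic/env" = { };      # AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY for R2
  };

  services.restic.backups.prowlarr-remote = {
    paths = [ "/persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr" ];
    # restic's s3 backend pointed at R2; <account-id> and <bucket> are placeholders.
    repository = "s3:https://<account-id>.r2.cloudflarestorage.com/<bucket>/prowlarr";
    passwordFile = config.sops.secrets."services/restic/password".path;
    environmentFile = config.sops.secrets."services/restic/env".path;
    initialize = true;
  };
}
```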

Backups are defined per service, in each service's module. This is largely done with a lib helper I've written, which creates both the local and remote restic backup entries in my nixosConfiguration.

:simple-github: nixos/modules/nixos/lib.nix
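The helper itself isn't reproduced in this page. Below is a hypothetical `mkRestic` function in the same spirit, returning a local and a remote job that both read from the nightly snapshot, followed by how a service module might call it. All names, repository locations and secret paths are assumptions.

```nix
{ config, ... }:
let
  # Hypothetical helper: one local and one remote restic job for an app,
  # both reading from the nightly ZFS snapshot rather than the live dataset.
  mkRestic =
    { app, dir, localRepo, remoteRepo, passwordFile, environmentFile }:
    let
      common = {
        paths = [ "/persist/.zfs/snapshot/restic_nightly_snap/${dir}" ];
        inherit passwordFile;
        initialize = true;
        timerConfig = {
          OnCalendar = "02:05";
          RandomizedDelaySec = "2h"; # each job gets its own random offset
          Persistent = true;
        };
      };
    in
    {
      "${app}-local" = common // { repository = "${localRepo}/${app}"; };
      "${app}-remote" = common // {
        repository = "${remoteRepo}/${app}";
        inherit environmentFile; # S3 credentials are only needed for R2
      };
    };
in
{
  # Usage from a (hypothetical) prowlarr module; this yields the
  # restic-backups-prowlarr-local and restic-backups-prowlarr-remote units.
  services.restic.backups = mkRestic {
    app = "prowlarr";
    dir = "containers/prowlarr";
    localRepo = "/mnt/nas/restic"; # hypothetical NAS mount
    remoteRepo = "s3:https://<account-id>.r2.cloudflarestorage.com/<bucket>";
    passwordFile = config.sops.secrets."services/restic/password".path;
    environmentFile = config.sops.secrets."services/restic/env".path;
  };
}
```

Keeping one repository per app is what makes the per-service restores discussed next possible.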

!!! question "Why not back up the entire persist folder in one hit?"

    Possibly a holdover from my k8s days, but it's incredibly useful to be able to restore per service, especially if you just want to move an app around or restore a single app. You can always restore multiple repos with a script/taskfile.

NixOS creates a service and a timer for each job. The output below shows the units for prowlarr's local and remote backups.

```
truxnell@daedalus ~> systemctl list-unit-files | grep restic-backups-prowlarr
restic-backups-prowlarr-local.service   linked   enabled
restic-backups-prowlarr-remote.service  linked   enabled
restic-backups-prowlarr-local.timer     enabled  enabled
restic-backups-prowlarr-remote.timer    enabled  enabled
```

NixOS (as of 23.05 IIRC) now provides shims (`restic-<job>`) that run restic commands with the same environment variables as the service.

```
truxnell@daedalus ~ [1]> sudo restic-prowlarr-local snapshots
repository 9d9bf357 opened (version 2, compression level auto)
ID        Time                 Host      Tags  Paths
---------------------------------------------------------------------------------------------------------------------
293dad23  2024-04-15 19:24:37  daedalus        /persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr
24938fe8  2024-04-16 12:42:50  daedalus        /persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr
---------------------------------------------------------------------------------------------------------------------
2 snapshots
```

## Manually backing up

Because each job is a systemd service and timer, you can query it or trigger a manual run with `systemctl start restic-backups-<service>-<destination>`. Local and remote jobs work exactly the same way; querying the remote is just a fraction slower to return information.

```
truxnell@daedalus ~ > sudo systemctl start restic-backups-prowlarr-local.service
< no output >
truxnell@daedalus ~ [1]> sudo restic-prowlarr-local snapshots
repository 9d9bf357 opened (version 2, compression level auto)
ID        Time                 Host      Tags  Paths
---------------------------------------------------------------------------------------------------------------------
293dad23  2024-04-15 19:24:37  daedalus        /persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr
24938fe8  2024-04-16 12:42:50  daedalus        /persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr
---------------------------------------------------------------------------------------------------------------------
2 snapshots
truxnell@daedalus ~> date
Tue Apr 16 12:43:20 AEST 2024
truxnell@daedalus ~>
```

## Restoring a backup

Here is a test restore into `/tmp` (a real restore would use `--target /`). For a real restore I would just have to stop the service, run the restore, then start the service again.

```
truxnell@daedalus ~ [1]> sudo restic-lidarr-local restore --target /tmp/lidarr/ latest
repository a2847581 opened (version 2, compression level auto)
[0:00] 100.00%  2 / 2 index files loaded
restoring <Snapshot b96f4b94 of [/persist/nixos/lidarr] at 2024-04-14 04:19:41.533770692 +1000 AEST by root@daedalus> to /tmp/lidarr/
Summary: Restored 52581 files/dirs (11.025 GiB) in 1:37
```
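A hypothetical restore script following those steps, packaged with `pkgs.writeShellApplication`. One caveat, stated as my understanding rather than something tested here: jobs that back up from the snapshot record paths under `/persist/.zfs/snapshot/...`, so restoring them with `--target /` would aim at the read-only snapshot directory. restic's `<snapshot>:<subfolder>` syntax can instead restore that subfolder's contents straight into the live directory (check `restic restore --help` on your version). The script name, `podman-prowlarr.service` unit and target directory are placeholders; run it with sudo.

```nix
{ pkgs, ... }:
{
  environment.systemPackages = [
    (pkgs.writeShellApplication {
      name = "restore-prowlarr"; # hypothetical script name
      text = ''
        # Stop the app so nothing writes to its data during the restore.
        systemctl stop podman-prowlarr.service

        # Restore the snapshot's subfolder contents into the live directory,
        # since --target / would point at the read-only .zfs snapshot path.
        restic-prowlarr-local restore \
          "latest:/persist/.zfs/snapshot/restic_nightly_snap/containers/prowlarr" \
          --target /persist/containers/prowlarr

        systemctl start podman-prowlarr.service
      '';
    })
  ];
}
```

`writeShellApplication` runs the script with `errexit` set, so if the restore fails the service is deliberately left stopped for inspection.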

## Failed backup notifications

Failed backup notifications are baked in thanks to the global Pushover notification on systemd unit failure. No config necessary.

Here I tested it by giving the systemd unit file an incorrect path.

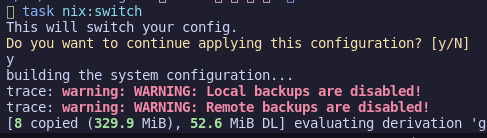
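A sketch of one way to wire up such a notification: a templated oneshot unit that posts to Pushover's messages API with curl, attached through `onFailure`. It is narrower than the global hook described (it only covers restic jobs), and the unit name, secret name and `PUSHOVER_TOKEN`/`PUSHOVER_USER` variables are hypothetical.

```nix
{ config, lib, pkgs, ... }:
{
  systemd.services = lib.mkMerge [
    {
      # Hypothetical template unit; the instance name (%i) is the failed unit.
      "notify-pushover@" = {
        description = "Pushover alert for failed unit %i";
        path = [ pkgs.curl ];
        scriptArgs = "%i";
        serviceConfig = {
          Type = "oneshot";
          # Hypothetical secret holding PUSHOVER_TOKEN=... and PUSHOVER_USER=...
          EnvironmentFile = config.sops.secrets."services/pushover/env".path;
        };
        script = ''
          curl -fsS \
            --form-string "token=$PUSHOVER_TOKEN" \
            --form-string "user=$PUSHOVER_USER" \
            --form-string "message=$1 failed on ${config.networking.hostName}" \
            https://api.pushover.net/1/messages.json
        '';
      };
    }
    # Hook every restic job's service up to the notifier.
    (lib.mapAttrs'
      (name: _: lib.nameValuePair "restic-backups-${name}" {
        onFailure = [ "notify-pushover@%n.service" ];
      })
      config.services.restic.backups)
  ];
}
```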

## Disabled backup warnings

Using module warnings, I have also added warnings to my NixOS modules that fire if backups are disabled on a host that isn't a development machine, just in case I do this or mix up flags on hosts. Roll your eyes, I will probably do it. The warning pops up when I do a dry run or deployment, but doesn't abort the build.
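NixOS's `warnings` option is one way to do this: each string in the list is printed during evaluation without failing the build. The `mySystem` options and the `"Development"` purpose value are hypothetical stand-ins for whatever flags the real modules use, declared here only so the fragment stands on its own.

```nix
{ config, lib, ... }:
let
  cfg = config.mySystem;
  isDev = cfg.purpose == "Development";
in
{
  # Hypothetical option declarations.
  options.mySystem = {
    purpose = lib.mkOption {
      type = lib.types.str;
      default = "Production";
    };
    resticBackup.local.enable = lib.mkEnableOption "local restic backups" // { default = true; };
    resticBackup.remote.enable = lib.mkEnableOption "remote restic backups" // { default = true; };
  };

  # Printed on dry run or deploy; unlike `assertions`, these never fail the build.
  config.warnings =
    lib.optional (!cfg.resticBackup.local.enable && !isDev)
      "Local restic backups are disabled on ${config.networking.hostName}"
    ++ lib.optional (!cfg.resticBackup.remote.enable && !isDev)
      "Remote restic backups are disabled on ${config.networking.hostName}";
}
```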